How AI Marking Actually Works: A Teacher's Guide

"AI marking" can sound like a black box: you upload a paper, and a grade comes out. But how? Is it just guessing? Is it reliable? As an educator, you're right to be skeptical. Let's break down exactly what's happening under the hood.

Key Takeaway:

Modern AI marking isn't just "spell check on steroids." It uses **Large Language Models (LLMs)**, the same technology behind tools like ChatGPT, to read, interpret, and analyze student work against your specific rubric, much as a human assessor would.

1. The "Brain": Large Language Models (LLMs)

The biggest shift in AI marking came with the rise of LLMs. Before them, automated grading was rigid: it mostly checked surface features such as keywords, sentence length, and grammar, and it couldn't judge the *meaning* or *quality* of an argument.
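
To see why keyword matching falls short, here is a deliberately simplified sketch of a keyword-based scorer (a hypothetical illustration, not any particular product; the term list and sample answers are made up). It awards a point for each expected term it finds, so a student who merely lists the terms scores the same as one who explains how they connect.

```python
import re

# Hypothetical keyword list for an essay on the causes of an event.
EXPECTED_TERMS = {"taxation", "debt", "protest", "revolution"}


def keyword_score(essay: str, terms: set[str] = EXPECTED_TERMS) -> int:
    """Count how many expected terms appear anywhere in the essay.

    This mimics rule-based grading: it checks whether words are present,
    not whether they are connected into an argument.
    """
    words = set(re.findall(r"[a-z]+", essay.lower()))
    return len(terms & words)


explained = (
    "Heavy debt forced the crown to raise taxation, and because the new "
    "taxes fell on the poor, protest spread until it became a revolution."
)
listed = "Taxation. Debt. Protest. Revolution."

# Both answers get the same score, even though only one explains the causes.
print(keyword_score(explained), keyword_score(listed))
```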

LLMs are different. They are trained on vast amounts of text, drawn from sources such as articles and books, and this training allows them to pick up on context, nuance, tone, and complex ideas. When an LLM reads a student's essay, it's not just counting words; it's building a sophisticated understanding of what the student is trying to say.

2. The "Instructions": Rubric Alignment

An LLM on its own doesn't know how to grade. It knows how to read and write. To be useful for marking, it needs your instructions. This is where the **rubric** comes in.

When you provide a rubric, you are giving the AI a set of rules. Your prompt to the AI is essentially: "Read this paper, then evaluate it *only* based on these criteria."
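
As a rough illustration, a rubric can be stored as structured data and rendered into the instruction text sent with each paper. The `Criterion` type, the `rubric_instructions` function, and the exact wording below are assumptions made for this sketch, not Feedback Flows' actual prompt format.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One rubric row (hypothetical structure for this sketch)."""
    name: str
    max_marks: int
    descriptor: str


def rubric_instructions(criteria: list[Criterion]) -> str:
    """Turn rubric criteria into instructions that restrict grading to them."""
    lines = [
        "Read the student's paper, then evaluate it ONLY against the criteria below.",
        "Do not award or deduct marks for anything the criteria do not mention.",
        "",
    ]
    for number, c in enumerate(criteria, start=1):
        lines.append(f"{number}. {c.name} ({c.max_marks} marks): {c.descriptor}")
    return "\n".join(lines)


rubric = [
    Criterion(
        name="Analysis",
        max_marks=10,
        descriptor="The student must clearly explain the causes of the event "
        "and provide supporting evidence.",
    ),
]
print(rubric_instructions(rubric))
```

Keeping the rubric as data rather than free text makes it easy to reuse the same criteria, and the same marks available, for every paper in a class.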

Example: How a Rubric Works

- Your Rubric Says: "Analysis (10 marks): The student must clearly explain the causes of the event and provide supporting evidence."

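- The AI's Process (simplified: in practice the model weighs all of this together as it reads, rather than running each check as a separate step):

  - The AI reads the entire text to identify sections that discuss "causes."

  - It then considers whether the student links causes to their effects (often signalled by phrases such as "this led to," "because of," or "as a result") to see if the student is *explaining* or just *listing* facts.

  - Next, it checks for supporting evidence (quotes, dates, statistics) near those explanations.

  - Finally, it compares what it found to the level descriptors in your rubric (for example, the "A-grade" descriptor) and provides a score and a comment based on the closest match.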

3. The Process: From Upload to Feedback

When you use a tool like Feedback Flows, the process looks like this:

- Text Extraction: Your file (PDF, .docx) is read, and all the text, formatting, and structure are extracted.

- Prompt Engineering: Your student's text is combined with your rubric and a complex, pre-built "system prompt." This master prompt, which is refined by our team, instructs the AI on how to behave (e.g., "You are a fair and helpful teacher. Your feedback must be constructive...").

- AI Analysis: This entire package (prompt + rubric + student text) is sent to the LLM. The AI reads it all at once and generates its analysis, including feedback, comments, and scores for each criterion.

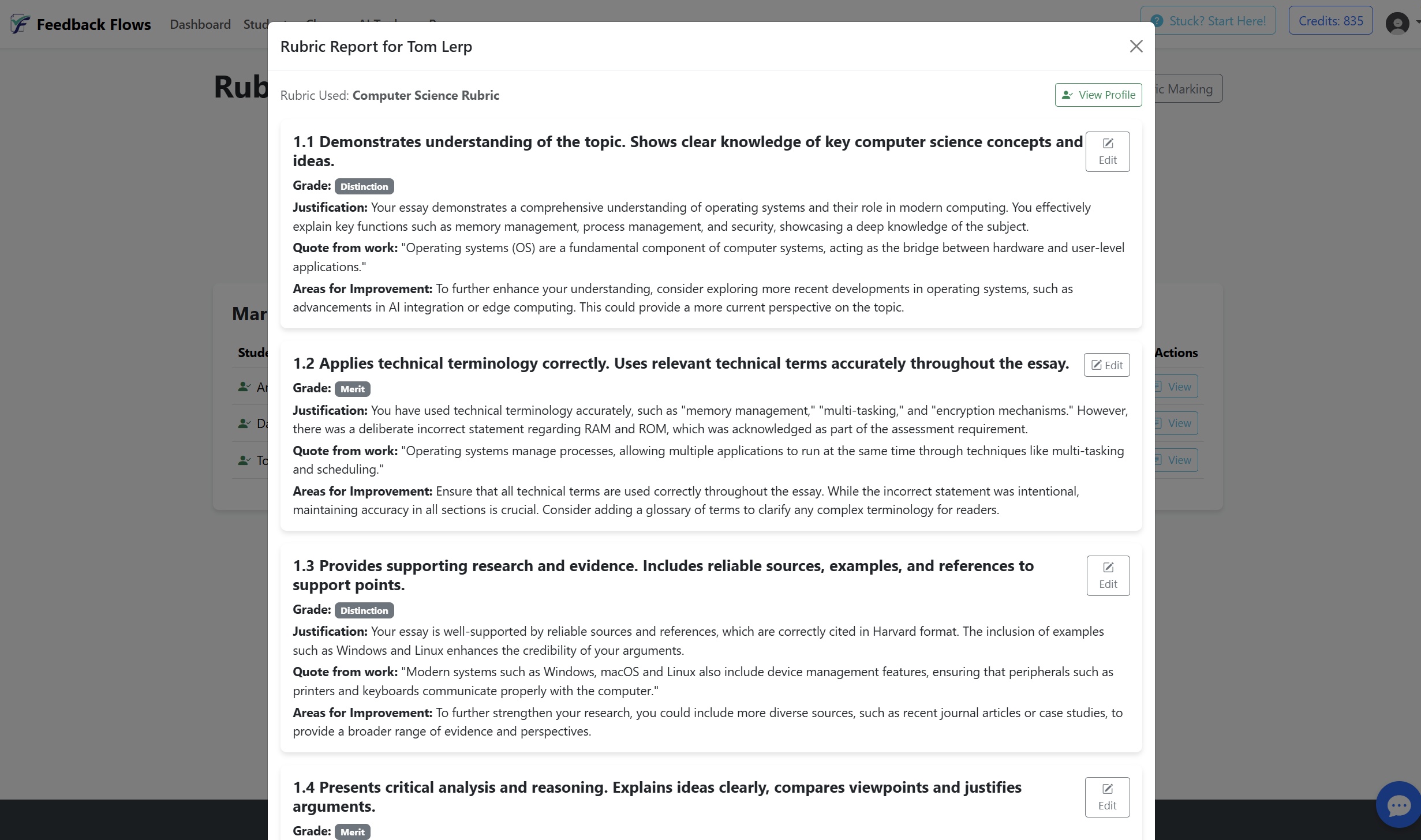

- Display: The AI's raw response (which is just a block of text) is received by our platform, parsed, and displayed to you in a clean, easy-to-read format.
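
The text-extraction step can be approximated for .docx files with Python's standard library alone, because a .docx file is a ZIP archive whose main body lives in `word/document.xml`. This is a simplified sketch that assumes a plain document: it keeps paragraph breaks but ignores headers, footnotes, text boxes, and styling, and PDFs would need a different approach entirely. The `extract_docx_text` name is hypothetical.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# WordprocessingML namespace used by the tags inside word/document.xml.
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def extract_docx_text(path_or_file) -> str:
    """Return the plain text of a .docx file, one line per paragraph."""
    with zipfile.ZipFile(path_or_file) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    paragraphs = []
    for para in root.iter(f"{W_NS}p"):
        # Word can split one paragraph's text across several <w:t> runs.
        runs = [node.text or "" for node in para.iter(f"{W_NS}t")]
        paragraphs.append("".join(runs))
    return "\n".join(paragraphs)


# Try it on a tiny in-memory archive containing only the part this sketch reads.
DOC_XML = (
    '<w:document xmlns:w="http://schemas.openxmlformats.org/wordprocessingml/2006/main">'
    "<w:body>"
    "<w:p><w:r><w:t>The war began </w:t></w:r><w:r><w:t>because of debt.</w:t></w:r></w:p>"
    "<w:p><w:r><w:t>This led to protest.</w:t></w:r></w:p>"
    "</w:body></w:document>"
)
demo = io.BytesIO()
with zipfile.ZipFile(demo, "w") as archive:
    archive.writestr("word/document.xml", DOC_XML)
print(extract_docx_text(demo))
```

With a real file, `extract_docx_text("essay.docx")` (a hypothetical file name) works the same way, since `zipfile.ZipFile` accepts either a path or a file-like object.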
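
The prompt-assembly, analysis, and display steps can be sketched in the same spirit. This version assumes the common chat-message format (a list of `role`/`content` pairs) and assumes the system prompt asks the model to reply in JSON; `SYSTEM_PROMPT`, `build_messages`, and `parse_reply` are hypothetical names, `call_llm` stands in for whichever model API a platform actually uses, and the sample reply is invented for illustration. Checking the reply before displaying it matters because the model returns free text, which is not guaranteed to follow the requested format or stay within the available marks.

```python
import json

# Hypothetical system prompt, loosely based on the example above.
SYSTEM_PROMPT = (
    "You are a fair and helpful teacher. Your feedback must be constructive. "
    'Reply only with JSON: {"criteria": [{"name": str, "score": int, "comment": str}], '
    '"summary": str}.'
)


def build_messages(rubric_text: str, student_text: str) -> list[dict]:
    """Bundle the system prompt, rubric and student text into one request."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"RUBRIC:\n{rubric_text}\n\nSTUDENT PAPER:\n{student_text}"},
    ]


def parse_reply(raw: str, max_marks: dict[str, int]) -> dict:
    """Turn the model's raw text into checked per-criterion scores for display."""
    data = json.loads(raw)  # raises ValueError if the reply is not valid JSON
    results = {}
    for item in data["criteria"]:
        name, score = item["name"], int(item["score"])
        if name not in max_marks:
            raise ValueError(f"Reply graded an unknown criterion: {name!r}")
        if not 0 <= score <= max_marks[name]:
            raise ValueError(f"{name}: score {score} is outside 0-{max_marks[name]}")
        results[name] = {"score": score, "out_of": max_marks[name], "comment": item["comment"]}
    missing = set(max_marks) - set(results)
    if missing:
        raise ValueError(f"Reply skipped criteria: {sorted(missing)}")
    return {"criteria": results, "summary": data.get("summary", "")}


messages = build_messages(
    "Analysis (10 marks): The student must clearly explain the causes of the event "
    "and provide supporting evidence.",
    "The war began because of debt. This led to protest.",
)
# reply = call_llm(messages)  # hypothetical API call; a made-up reply is used instead
reply = (
    '{"criteria": [{"name": "Analysis", "score": 6, '
    '"comment": "Causes are linked, but no evidence is given."}], '
    '"summary": "A clear start; add dates or quotes to support each cause."}'
)
report = parse_reply(reply, {"Analysis": 10})
print(report["criteria"]["Analysis"]["score"], "/", report["criteria"]["Analysis"]["out_of"])
```

Rejecting a malformed or out-of-range reply, rather than displaying it, is one way to keep an obviously broken result from ever reaching the teacher.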

Common Questions from Teachers

Is it just plagiarism or AI detection?

No. While some tools do that, true AI marking is focused on *quality*, not just *originality*. A paper could be 100% original, AI-free, and still get a low mark if the argument is weak. Feedback Flows' AI detection and stylometric analysis are separate tools used to ensure academic integrity, but they are not the "marker" itself.

Is it reliable? Can I trust the grades?

This is the most important question. AI marking is highly consistent, which removes some sources of human inconsistency: it will never grade a paper more harshly because it's tired or had a bad day. It isn't perfect, though; it can sometimes misunderstand a very niche or creative argument.

That's why we always refer to AI-generated grades as **indicative**. The AI's job is to do 90% of the heavy lifting for you: flagging grammar issues, checking the work against the rubric, and writing the summary feedback. Your job, as the professional educator, is the final 10%: reviewing the feedback, validating the grade, and applying your own judgment. It's a partnership, not a replacement.

What about student privacy?

This is critical. Reputable platforms like Feedback Flows do not use student data to train AI models. Submissions are processed and then deleted. Always check the privacy policy of any AI tool you use.

Ready to see it in action?

Try our AI marking tools for free. Get immediate credits on sign-up and see how it works on your own students' papers.

Get Started for Free